Overview

While we explored protecting virtual machines in SUSE Harvester in an earlier post, there were still some gaps before Veeam could offer full support. We still have additional testing and validation work to perform before we can offer full support of the platform, but initial testing is looking good with the recently released version 1.3.1. End users can now deploy Kasten into their Harvester environments and begin protecting their VMs with a few minor workarounds.

Let’s have another go

Resolved Issue 1: PVC Security Context

Previously, the Longhorn version bundled with Harvester had an issue around PVC security contexts that required Veeam Kasten volume export pods to run as root in order to perform a backup to an off-cluster location (e.g. S3-compatible storage or NFS). While the Kasten engineering team was able to implement a quick workaround for a proof of concept, the workaround couldn’t be released into the wild as it would have introduced a major security vulnerability into end user environments. We collaborated with the SUSE Longhorn engineering team to identify the issue and test a fix, and I’m happy to share that with the release of Harvester 1.3.1, which bundles Longhorn v1.6.2, the issue in question has been resolved.

Resolved Issue 2: Exposing Kasten Dashboard

The other issue we ran into previously was the lack of a robust way of exposing the Kasten dashboard. I had mistakenly stated that Harvester had no way of exposing a service outside of the cluster. Our friends at Rancher Government gently corrected me, noting that because Harvester ships with RKE2 under the covers, it also includes the Nginx Ingress. And because Kasten can be deployed behind a cluster ingress directly via Helm values, we now have a robust way of exposing the Kasten dashboard without having to do port-forwards or other unnatural things. And to boot, the SUSE Harvester PM and engineering team confirmed that using the bundled nginx ingress to expose Kasten is supported. Using default settings, the Kasten UI can now be reached by taking the Harvester VIP and appending /k10/ to the end.
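As a small sketch of what "VIP plus /k10/" looks like in practice, the helper below builds the dashboard URL. The function name and the example address are hypothetical, and the use of HTTPS is an assumption about how the ingress is served in your environment.

```shell
#!/bin/sh
# Build the Kasten dashboard URL from the Harvester VIP.
# Assumption: the ingress is served over HTTPS on the default /k10/ path.
k10_dashboard_url() {
  vip="$1"
  printf 'https://%s/k10/\n' "$vip"
}

# Example probe against a hypothetical VIP (-k tolerates a self-signed certificate):
#   curl -k -s -o /dev/null -w '%{http_code}\n' "$(k10_dashboard_url 192.0.2.10)"
```

If the probe does not respond, `kubectl get ingress -n kasten-io` is a reasonable first place to look.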

Issue 3: Workaround for PVC mapping

One outstanding issue persists when using Kasten with Harvester, which is around an annotation on VM PVCs that upsets the Harvester admission controller when restoring a VM from backup or snapshot. Fortunately this can be worked around rather simply, using a Kasten Transform to remove the annotation upon restore, allowing the VM to be scheduled and booted:

    cat <<EOF | kubectl apply -f -
    kind: TransformSet
    apiVersion: config.kio.kasten.io/v1alpha1
    metadata:
      name: harvesterpvcfix
      namespace: kasten-io
    spec:
      transforms:
        - subject:
            resource: persistentvolumeclaims
          name: harvester_owned-by
          json:
            - op: remove
              path: /metadata/annotations/harvesterhci.io~1owned-by
    EOF
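The ~1 in the transform path is not a typo. The path is a JSON Pointer (RFC 6901), where / separates segments, so a literal / inside a key such as harvesterhci.io/owned-by has to be written as ~1 (and a literal ~ as ~0). The helper below is a hypothetical illustration of that escaping and is not part of Kasten:

```shell
#!/bin/sh
# Escape a single key for use as one JSON Pointer segment (RFC 6901).
# "~" must be rewritten to "~0" first, then "/" to "~1", so the
# tildes introduced by the second step are not escaped again.
json_pointer_escape() {
  printf '%s\n' "$1" | sed -e 's/~/~0/g' -e 's#/#~1#g'
}

json_pointer_escape 'harvesterhci.io/owned-by'
# prints: harvesterhci.io~1owned-by
```

The same rule applies if you ever need a transform that targets another annotation or label whose key contains a slash.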

Bonus Round: Considerations around Storage Classes

One thing I didn’t really address in the earlier blog post is how Harvester handles disk images and StorageClasses. In Harvester, uploading a VM image to the cluster triggers the creation of a new StorageClass. That’s not a big deal in itself, but recall that Kasten requires any StorageClass that supports block mode PVCs to be annotated with k10.kasten.io/sc-supports-block-mode-exports=true. The operational headache is that as users upload images to their Harvester cluster, new StorageClasses are created with the name longhorn-image-xxxxx, cloned from the longhorn StorageClass, but the cloned StorageClass does not keep the annotations defined on the “parent” longhorn StorageClass. As a result, end users have to add the k10.kasten.io/sc-supports-block-mode-exports=true annotation manually to every newly created StorageClass before they can protect or back up any VMs using that image.

While not in itself particularly difficult, it does lead to some operational overhead and friction when using Kasten to protect Harvester VMs. We’re currently exploring options to address this, but first and foremost we have an ask into SUSE Harvester Engineering to just ensure the annotation is included in the clone. No commitments yet, but we’ll keep our fingers crossed!
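Until that lands, one way to take some of the sting out is to annotate every image-backed StorageClass in one pass. This is a minimal sketch and not an official tool: the function names are hypothetical, and it assumes the classes keep the longhorn-image- prefix described above.

```shell
#!/bin/sh
# Keep only StorageClasses that Harvester generated for uploaded images.
# Reads `kubectl get storageclass -o name` style lines on stdin and
# prints the bare class names.
filter_image_classes() {
  sed -n 's#^storageclass\.storage\.k8s\.io/\(longhorn-image-.*\)$#\1#p'
}

# Annotate each matching class; --overwrite makes repeated runs harmless.
annotate_image_classes() {
  kubectl get storageclass -o name | filter_image_classes |
    while read -r sc; do
      kubectl annotate --overwrite storageclass "$sc" \
        k10.kasten.io/sc-supports-block-mode-exports=true
    done
}
```

Running `annotate_image_classes` after each image upload (or on a schedule) should keep newly created classes eligible for block mode exports.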

Stop Boring Us, Get to the Chorus

To borrow a phrase from the great Berry Gordy, Jr., enough with the words already and get to the good part! So without further ado, here’s how you can deploy Veeam Kasten to a Harvester cluster to begin protecting VMs:

-

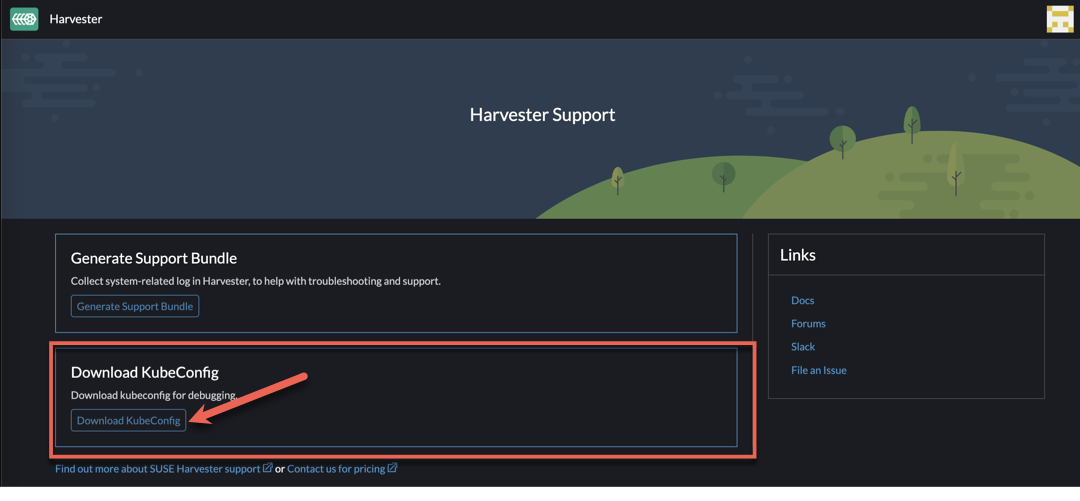

Connect to the Harvester Cluster via kubectl. To retrieve the kubectl config, navigate to Support > Download KubeConfig in the Harvester UI to download the kubeconfig YAML file.

- Annotate the two built-in storage classes and any other created longhorn-image-xxxxx classes so Kasten knows it can export block mode PVCs from them:

      kubectl annotate storageclass harvester-longhorn k10.kasten.io/sc-supports-block-mode-exports=true
      kubectl annotate storageclass longhorn k10.kasten.io/sc-supports-block-mode-exports=true

- Annotate the longhorn-snapshot VolumeSnapshotClass so Kasten knows it supports volumesnapshot capabilities:

      kubectl annotate volumesnapshotclass longhorn-snapshot k10.kasten.io/is-snapshot-class=true

  Ensure only the longhorn-snapshot volumesnapshotclass is annotated and not the longhorn volumesnapshotclass, as otherwise you may experience issues when attempting to perform backups.

- Install Kasten via helm, using the existing nginx ingress class on the cluster:

      helm repo add kasten https://charts.kasten.io
      helm install k10 kasten/k10 \
        --namespace 'kasten-io' \
        --create-namespace \
        --set "ingress.create=true" \
        --set-string "ingress.class=nginx" \
        --set "auth.basicAuth.enabled=true" \
        --set-string "auth.basicAuth.htpasswd=veeam:{SHA}jIRWj6Rhdep75BYFQaz0FSjZk60="

  Note this will set the Kasten K10 user and password to veeam / kasten.

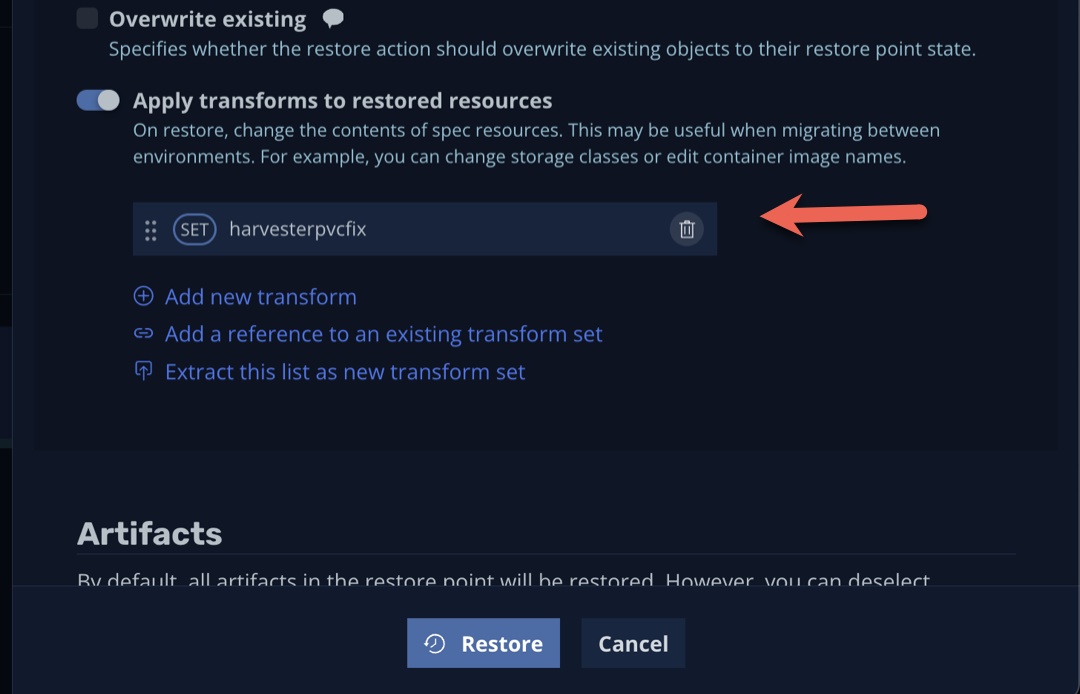

- Create a TransformSet to remove the harvesterhci.io/owned-by annotation from VM PVCs so VMs can be restored onto the cluster.

      cat <<EOF | kubectl apply -f -
      kind: TransformSet
      apiVersion: config.kio.kasten.io/v1alpha1
      metadata:
        name: harvesterpvcfix
        namespace: kasten-io
      spec:
        transforms:
          - subject:
              resource: persistentvolumeclaims
            name: harvester_owned-by
            json:
              - op: remove
                path: /metadata/annotations/harvesterhci.io~1owned-by
      EOF

When you restore a VM, select the harvesterpvcfix transform so the VM can be restored and booted successfully in Harvester.

And that’s it! You can now protect Harvester VMs using Veeam Kasten! Stay tuned for official support announcements!
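One optional sanity check before your first backup: since only the longhorn-snapshot VolumeSnapshotClass should carry the Kasten annotation, a quick script can flag any other class that has it. This is a sketch under assumptions, with hypothetical function names; it relies on kubectl's jsonpath output and a tab-separated name/value format chosen here for illustration.

```shell
#!/bin/sh
# Print "name<TAB>annotation value" for every VolumeSnapshotClass.
# The dots in the annotation key are escaped for kubectl's jsonpath.
list_snapshot_class_annotations() {
  kubectl get volumesnapshotclass \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.annotations.k10\.kasten\.io/is-snapshot-class}{"\n"}{end}'
}

# Read those lines on stdin and print any class other than
# longhorn-snapshot that is annotated "true".
unexpected_snapshot_classes() {
  awk -F '\t' '$2 == "true" && $1 != "longhorn-snapshot" { print $1 }'
}

# Usage:
#   list_snapshot_class_annotations | unexpected_snapshot_classes
```

Empty output means only longhorn-snapshot is annotated; any name printed is a class whose annotation you may want to remove.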